Comparing the $1000+ Campaign Contributions of Each Front-Running Candidate for NYC Mayor in 2009, 2013, 2017, and 2021

Side-by-side view of each year's heatmap showing where the $1000+ donations came from.

One step of the project involved fixing incorrectly hyphenated zip codes in OpenRefine.

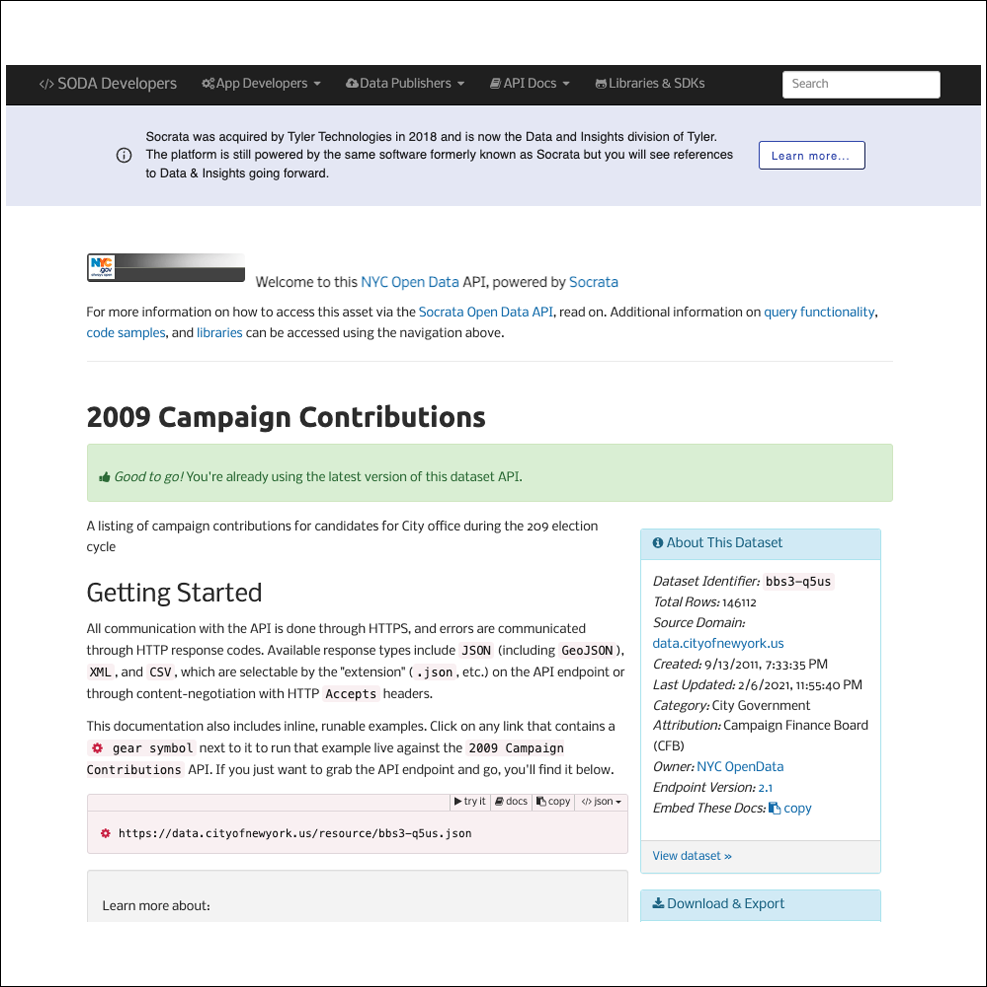

I used NYC Open Data's Socrata API to make API calls to gather my needed data. Each campaign year had its own API homepage.

(For the README file, code, and csv files click here.)

Executive Summary

For my final INFO-664 Programming for Cultural Heritage project, I analyzed campaign contributions made to each front-running candidate for NYC mayor in the years 2009, 2013, 2017, and 2021. These are the years in which Michael Bloomberg, Bill De Blasio (for two terms), and Eric Adams, respectively, were the frontrunners. First, I compared all contribution amounts of at least $0 to those just $1000 and up. Then, I heatmapped the donations of $1000 and up because I wanted to see where these higher levels of contributions came from.

The results showed that:

1) In 2009, Bloomberg was the only person to have contributed to his campaign. There were 90 contributions made all by Bloomberg himself. They ranged from $10,000 to $8,000,000 and totaled $108,375,000 altogether. Therefore, his heatmap shows what appears to be just one large circle made up of all 90 data points emitting from his Upper East Side zip code of 10075.

2) The data for 2013 front-runner De Blasio showed that all campaign contribution amounts of at least $0 that had zip code details provided came from 16,350 people and totaled $11,073,194. When looking just at contributions of at least $1000, there were 3,676 entries altogether in the original json file from the initial API call. But when looking into the json entries that actually had zip code information provided, we were left with 3,627 people. When totaling up the contribution amounts from this group, it totaled $9,000,472. His heatmap includes 3600 of these points. Twenty of the points were removed from the csv file via OpenRefine due to “NA” values that were probably the result of zip codes that had been recorded incorrectly and didn’t actually exist. The other seven points were incorrectly plotted data points that showed up in Europe and Asia.

3) The data for 2017 front-runner De Blasio showed that all campaign contribution amounts of at least $0 that had zip code details provided came from 18,947 people and totaled $6,737,843. When looking just at contributions of at least $1000, there were 1,981 entries altogether in the original json file from the initial API call. But when looking into the json entries that actually had zip code information provided, we were left with 1,970 people that had zip code information provided. When totaling up the contribution amounts from this group, it equalled $5,557,689. His heatmap includes 1,799 of these data points. Of the 1,970 people, 139 of the points were removed from the csv file via OpenRefine due to “NA” values, and another 32 were incorrectly plotted as being located at various positions overseas.

4) The data for 2021 front-runner Eric Adams showed that all campaign contribution amounts of at least $0 that had zip code details provided came from 6,181 and totaled $2,978,043. When just looking at contributions of at least $1000, there were 542 entries in the json file from the API call. But when looking into the json entries that actually had zip code information provided, we were left with 531 people that had zip code information provided. When totaling up the contribution amounts from this group, it totaled $1,681,057. His heatmap includes 494 of these points. Thirty-seven of the points were removed from the csv file via OpenRefine due to “NA” values that were probably the result of zip codes that had been recorded incorrectly and didn’t actually exist.

Tools Used

Programming languages: Python, R

Google Maps API (to geocode addresses to longitude, latitude)

OpenRefine (for data cleaning)

Mapbox (to create heatmaps)

Method

1) Using NYC Open Data API

First, in order to use the API, I had to sign up for their app token (which I would then pass via the “$$app_token parameter” in the URL when making the API calls).

2) Looping through paginated API results and creating a json file

To gather the initial data for each year, I used a python script that would loop through paginated data results. This is because the API only returns 1000 results at a time, and it was possible that the results were going to be in the several or tens of thousands. I also made sure that the script was returning my results in ascending order starting with the lowest “refno” just so there was some organization to my results. I used this script to output one json file that showed all campaign contributions at least $0 - this is due to negative “refund” values that exist in the database due to some complicated revolving-door situation that I did not look into - listing all campaign contributions made to each candidate that was at least $1000.

3) Converting the json file to a csv file

Then, I used another python script to take certain key-value pairs from the json file - such as name, city, state, zip code, and contribution amount - and write a csv file that organized the info into rows and columns. (However, during this process, there were some instances for the 2013, 2017, and 2021 data - not many, but a few - of the key-value pair for zip code not existing in the json file. So when the csv file was written, there were slightly fewer rows compared to the full amount of json entries in the original file made from the API call.) Specifically:

For the 2013 json file, there were 3,676 entries, but once the csv file was created, there were 3,627 rows, meaning 49 entries did not contain a “zip” key in the json file

For the 2017 json file, there were 1,981 entries, but the csv file that was created had 1,970 rows, meaning 11 entries did not have a “zip” key in the json file

For the 2021 json file, there were 542 entries, but the csv file that was created had 531 rows, meaning 11 entries did not have a “zip” key in the json file

4) Turning on missing leading zeros from certain zip codes in Google Sheets and exporting as an excel file

Then, I brought the csv file into Google sheets, and made sure all the zip codes with leading zeros appeared with their full five digits and then exported the file as an excel file (because if you export it as a csv, the leading zeros disappear again).

5) Geocoding zip codes to latitudes and longitudes

Then, I used an R script to geocode the zip codes into latitudes and longitudes using the Google Maps API’s geocode function (part of its ggmap library) and wrote the results to a csv file. (During this process, one time I encountered the error “Error in if (location == "") return(return_failed_geocode(output)) : missing value where TRUE/FALSE needed,” while trying to geocode the 2017 data. And after scrolling through the spreadsheet, I hypothesized that the handful of zip codes that were hyphenated - and done so with an incorrect number of three digits instead of four - may be causing the issues, so I went into OpenRefine (Fig. 1) and made all the zip codes just five digits long.

6) Removing rows with “NA” values

Then, I brought the csv file into OpenRefine, removed any rows that had “NA” values, and exported a new csv file. (I’m assuming these “NA” values were ones that were incorrectly entered zip codes that did not actually exist.)

7) Creating the heatmap

Then, to create the heatmap, I brought the dataset into Mapbox. I exported it as a new tileset, created a new “Style” (choosing “Monochrome” because I felt it was the cleanest to look at for a heatmap), and chose my dataset as the layer to place over the map. I then chose “Heatmap” as the style and changed the “Radius” from the default 30 px to 20 px.